In my previous blogs, we explored what AI is and why you don’t need to be afraid. We then examined concerns about privacy and job security. In this article, I’d like to talk about some applied concepts. If Generative is co-intelligence, prompting is how we can communicate with this intelligence to get reliable, consistent, and improved results.

In the Beginning…

Like everyone, prompting was not something I could naturally get right on the first try. I’d type simple questions and receive responses that were fine but not incredible. It was synthesizing some thoughts I was hoping to get back, but I knew there was a chance for the output to be better. So I attended workshops, tested methods, learned from mistakes, and from many, many interactions. I’ll share some of the methodologies I’ve found most useful to invite this co-intelligence into my workflow.

Prompting and how it works

Prompting is how you communicate with a Large Language Model (LLM). You provide input and direction, and the AI responds as best it can. The consistency and quality of the LLMs response depend on how things are phrased and placed in a sentence. This is because, behind the scenes, the LLM parses the input you provide into something called a token.

Tokens are not full words but pieces of them that are assigned weights and indexed to create meaning for the model. This is where prompt engineering comes in. Prompt engineering is the practice of creating organized input that makes it easier for an AI to follow and respond consistently and meaningfully. It’s not necessarily about being technical, but about being intentional with how you organize it. Think of it like a system of communication or a syntax. A prompt with a structure that includes who the message is for, what the goal of the communication is, and how you’ll measure success will always return a better result.

Co-intelligence across modalities

There’s not a single way to work with generative AI. It’s evolving rapidly and showing promise for gains in more and more mediums. Here’s a quick look at some of the main categories today.

- Text to text

ChatGPT-4o, Claude 3 Opus, and Microsoft Copilot are excellent tools for writing, summarizing, brainstorming, or problem-solving. They remember your context, learn your preferences, and can even read files like PDFs and spreadsheets.

- Text to image

Midjourney has been a leader in this space with the ability to create fantastic images and photorealistic detail. ChatGPT’s latest image-generating model, embedded in 4o, has taken a major leap forward too. It brings very granular detail into image generation—you can even get it to write letters accurately now. Ideogram is also a very real player in this space for consistent branding across images.

- Text to Video

Platforms like Runway, Pika, and Sora are pushing the envelope here. You can generate short videos using text and images as the input. It’s very useful for quick ideation and explainer content.

- Text to voice

ChatGPT’s voice capabilities support a full back-and-forth conversation. ElevenLabs allows for a very human-like voice to speak over the text provided. Use cases could include creating a personalized podcast to teach you auditorily while driving, after submitting a paper.

- Multimodal Thinking

As the models have progressed over the last few years, flagships like 4o and Opus can interact with text, images, audio, tables, code, and more. They can interpret charts and read spreadsheets. Perplexity is also a strong research assistant, pulling live results with inline citations for further research and context.

An important note about this, (a reiteration from previous blogs) is that it’s not about replacing your work, it’s about extending it and making you more capable. AI or LLM tools can support you in the same way a coworker would. We all have specialties, and with the help of co-intelligence, you can become a more well-rounded worker.

Three Text Prompting Approaches

Here are three prompt engineering approaches I’ve found useful and have put into practice for my communication with LLMs.

- Structure Prompting (Ali K. Miller’s Approach)

Ali’s methodology has become popular because she’s a major GenAI voice in the community. She uses labeled parts to frame a repeatable prompt:

#Identity: Who the AI is (“You are a History Professor”)

# Task: What it needs to do (“Describe why Julius Ceasar was influential to modern Europe”)

# Audience: Who this is for (“A 6th grader who’s interested in European history”)

#Goal: What you want to achieve(“To be able to share a few key points to my child for our trip in England”)

#Success Metrics: What you’ll evaluate (“So I can help my family understand ancient history”)

This structure removes ambiguity from the model. It tells it exactly what you’re trying to get back from it. The AI knows what role it’s supposed to play and what success looks like.

- Chain-of-Thought Prompting

Instead of giving the AI a single complex request, you break it down into smaller logical parts.

Start with: “Summarize this article”

Then: ”Identify three perspectives on this topic.”

Then: “Break them down into a table with pros and cons”

Finally: “Decide what you think is the best based on the pros and cons list and summarize why.”

This helps with clarity, especially if the task is complex with several different steps. It provides checkpoints to maybe change your perspective on where to go next. This approach allows the AI to become a thinking partner.

- Dictation Prompting (Malcolm Wurchota’s Style)

“Prompt like a 6-year old”, Malcolm’s style is to dictate everything because we can speak at 150 words per minute vs the average typing of 50 words per minute. The trick to this is to use the dictation hotkey of Windows plus H. Because it’s much quicker you naturally add more context to the prompt and emotional queues, which has been proven to provide more thoughtful responses like “Can you make this feel welcoming?”.

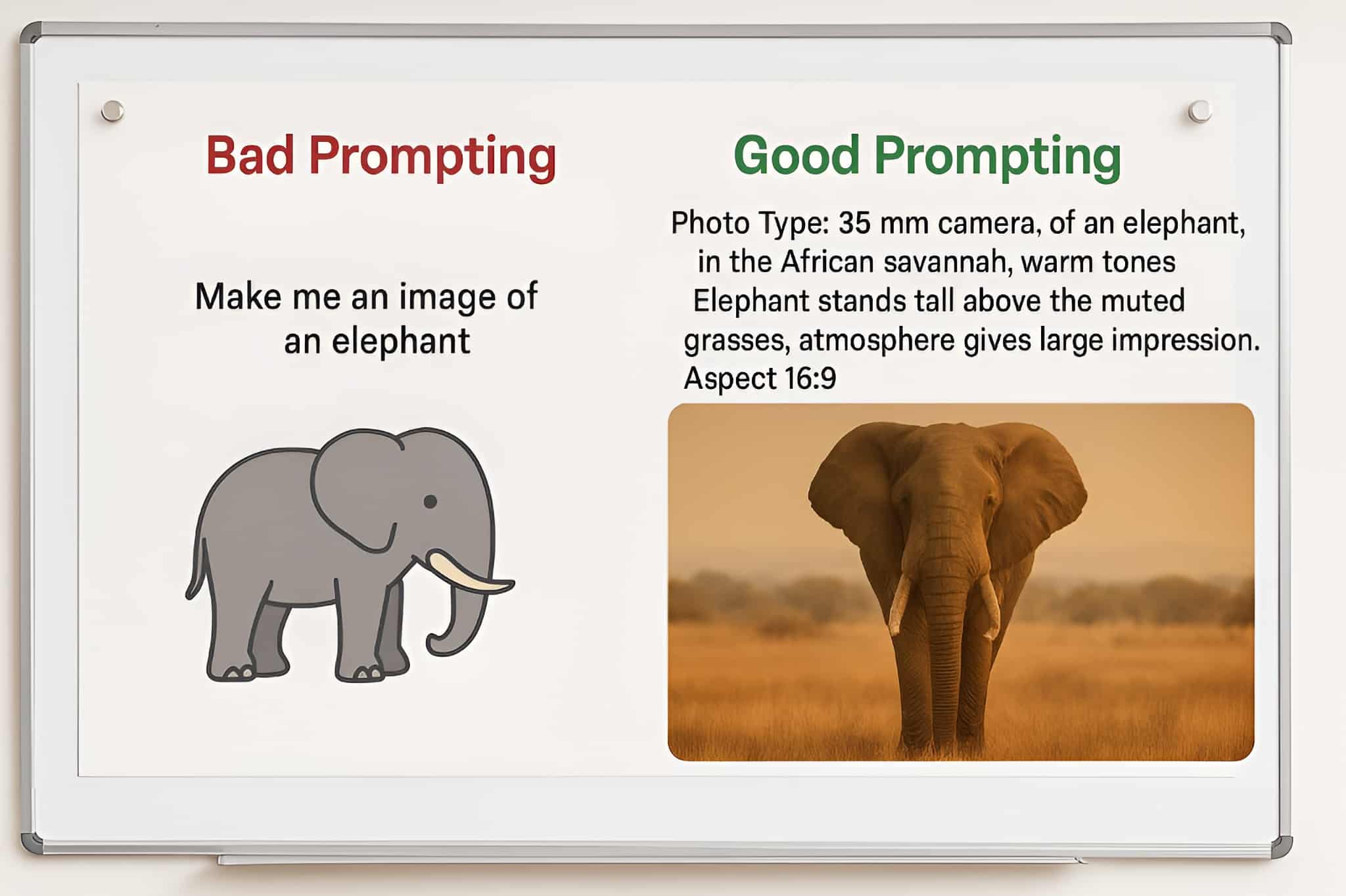

Prompting for Visual Work Text to Image Text to Video

There are a few thoughts on this, I find Rory Flynn’s Framework as one of the best for guiding image generation for Midjourney, and video generation in Sora. In Midjourney the first words are weighted the most, so typically, you lead with the type and medium you’re aiming for.

- Photo Type: Portrait, 35 mm camera, wide angle shot, drone shot etc.

- Subject: The main focus

- Color Palette: The specific tones or mood

- Environment: Where it is

- Mood and atmosphere: is it Calm, overwhelming, gritty…

- Composition: the Framing or the Angle

- Lighting: Where the light sources are coming from, and how intense

A fully built prompt could look like this.

Photo Type/Shot: editorial portrait, Subject: male, hooded figure, fantasy rogue, unable to see face, Color Scheme: dark colors, greens, browns, Environment: in a tavern, crowded, with many mystical humanoids, Mood: loud, Atmosphere: overwhelming, Composition: 16:9

When you’ve built repetition, you can drop the labels. They are only there to help you think clearly. The reason why this much description helps is that the image generation models were trained on huge libraries of images that are tagged with a ton of metadata. So if you can be very descriptive in the prompt, you get a much better result.

Why This Matters

Though the methods outlined above are very methodical, in practice, if you use these techniques over and over again, it becomes much easier to put together a prompt and takes less time. I know you’ll be happy with the results if you create a system of your own or use parts of these. Remember that AI is going to be more and more capable, so the best time to learn is now.

If you want to learn more about prompting or are curious about how AI could benefit you, and your company, connect with us to learn how CLM can support you and your team as you integrate AI into your workflow.